An analysis of the Institute’s Academic Regulations

by Rishit Shah (2023)

As students, we follow the rules and guidelines of the university and campus, for both academic and residential purposes. A lot of these are basic rules, to maintain the harmony on campus, and are not under study in this article. Here, the aim is to cover the academic rules which we follow, as set out by AUGSD (the Academic Undergraduate Studies Division).

This is a dive into the Academic Regulations of our university, specifically the 4th Section, titled Teaching and Evaluation. The aforementioned regulations, which I will now refer to as the AR, are readily available on the institute’s website, and were last updated in 2023. These regulations cover every course offered in BITS Pilani, whether it be FD, HD, or PhD.

Section 4.04 from the AR:

“Within one week of the beginning of classwork, the instructor-in-charge/instructor must announce to his/her class/section through a hand-out, the necessary information in respect of (i) the operations of the course (its pace, coverage and level of treatment, textbooks and other reading assignments, home tasks etc.); (ii) various components of evaluation, such as tutorials, laboratory exercises, home assignment, several quizzes/tests/examinations (announced or unannounced, open book or closed book), regularity of attendance, etc., (iii) the frequency, duration, tentative schedule, relative weightage etc. of these various components; (iv) the broad policy which governs decisions about make-up; (v) mid-semester grading; (vi) grading procedure (overall basis, review of border line cases, effect of class average, etc.) and (vii) other matters found desirable and relevant.”

Section 4.03 from the AR:

“… While recognising variations due to personal attitudes and styles, it is important that these are smoothened out so that the operation and grading in the different sections in a course, indeed between courses across the Institute; are free from any seeming arbitrariness.”

Sections 4.03 and 4.04 form the basis of the rest of the article, which discusses the discrepancies and ambiguities in handouts in detail. The handout is the map, chart, and scale of any course which we take in the institute. These documents are approved by the DCA (Departmental Committee of Academics) of the respective departments, and by AUGSD, before being given to the students. Yet arbitrariness remains prevalent in several areas which the handout is meant to clarify – namely surprise evaluations, classroom participation, and grading. The student body will agree that more clarity on how grades are awarded to us would be very beneficial to our academic pursuits and well-being.

On top of the AR, on April 2, 2025, all the faculty and students of the four engineering campuses of BITS Pilani received an email from the institute-wide Dean of AUGSD, titled Guidelines for Evaluation and Assessment, dated 31st March 2025. This document contains the sentence “To ensure consistency, fairness, and transparency in the evaluation and assessment process across all campuses, the following guidelines must be adhered to while designing and conducting assessments in your courses.”

It is clear that these guidelines were meant to lay out the rules to further standardize and clarify how students are to be evaluated. They also empower the students to escalate the issues we face to a higher grievance committee, in case our courses do not comply with the measures listed out here. The purpose here is to build trust in the system, and it does that on paper. The checks and balances of the DCA and AUGSD are meant to ensure that students are governed by rules, and not by the individual personalities of instructors.

Since then, about 9 full months and 1.5 semesters have passed, and yet the document which was mailed to us has not been implemented in its entirety. The following part of the article presents the relevant sections and their contents:

Section 1: Evaluation Criteria and Handouts

“Evaluation Components and Assessment Schedule – The course handout must clearly outline all evaluative components, including their weightage, marks distribution, and, where applicable, scheduled dates. It should specify the assessment methods, such as tutorials, laboratory exercises, home assignments, and quizzes/tests/examinations (both announced and unannounced, open-book or closed-book), along with their frequency, duration, and tentative schedule.

Mid-Semester Grading – A clear explanation of how mid-semester grades will be determined, specifying the components included and their respective contributions.

Grading Procedure – The overall grading approach, including considerations for borderline cases, the impact of class averages, and any review mechanisms.”

The three points outlined here are largely absent, incomplete, or ambiguous in the handouts which we receive. The frequencies of unannounced components, the breakdown of the weightage for mid-semester grading, and grading procedures are ignored in most of the handouts. It can be observed that grading in general is a random experience across courses, departments, and semesters.

While the grading in BITS can warrant a separate article of its own, for this piece, the point is that our professors need to spell out how they approach the curve distributions. The class mean is a good place to start: the grade awarded at the mean ranges from B to C (8 to 6 grade points). Without the data, the system is completely opaque to us, despite some meagre promises by the previous director to reveal how the grades are awarded. Some professors and departments tend to be stricter, and this creates problems across the board. While all departments cannot realistically be equal, a framework where the students are also involved (even as viewers) should be implemented, instead of it being hidden behind an Iron Curtain.

Section 4: Surprise Evaluations

“If the Instructor-in-charge/instructor plans to have surprise assessments as a part of the evaluation scheme, it should not exceed 10% of the total evaluation component. It is highly recommended that built-in makeup, such as the best N-2 out of N, be incorporated as part of it. It is also expected to span the surprise components throughout the semester, and it is recommended that the aim should mainly be to improve the understanding of the course rather than measure regularity.”

“…While regular attendance of students is strongly encouraged, instructors and instructors-in-charge should refrain from assigning direct marks for attendance. However, attendance may be considered as a distinguishing factor when determining grades for students with identical scores who are on the borderline between two grades.”
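The "best N-2 out of N" built-in makeup and the 10% cap quoted above come down to simple arithmetic. Here is a minimal Python sketch of how such a component could be scored. The function name, the equal maximum marks per test, and the choice to record a missed test as 0 are all assumptions made for illustration, not something the guidelines specify.

```python
def surprise_component_score(scores, max_per_test, weight_percent, dropped=2):
    """Score a surprise-test component with a best (N - dropped) of N policy.

    Assumptions for this sketch: every test carries the same maximum marks,
    and a missed test is recorded as 0.
    """
    if weight_percent > 10:
        # The guidelines cap surprise assessments at 10% of the total.
        raise ValueError("surprise assessments should not exceed 10% of the total")
    if len(scores) <= dropped:
        raise ValueError("need more tests than the number being dropped")

    # Keep only the best N - dropped scores; the lowest ones act as the makeup.
    counted = sorted(scores, reverse=True)[: len(scores) - dropped]
    fraction = sum(counted) / (max_per_test * len(counted))
    return fraction * weight_percent


# Six tests out of 10 marks each, two of them missed (recorded as 0).
marks = surprise_component_score([0, 0, 8, 10, 6, 10], max_per_test=10, weight_percent=10)
print(marks)
```

With two tests dropped, the student who missed two of the six tests is not penalised for them, which is the point of a built-in makeup.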

Another issue is the ET (exit test) and attendance components, where the ICs of the courses have a large degree of freedom and make rather arbitrary decisions. Some departments (like EEE) keep very few courses with these components, while others (like Chemistry) might have more courses with ETs. When these tests are kept, there is ambiguity in the number of tests and buffers which the instructors will keep throughout the semester. There are many instances where there is no clarity about the number of ETs in the semester, since the instructor does not divulge that information even verbally during classes. It is indeed rare to see this written down in the handout and set in stone.

Exit tests are also kept at times which are rather busy for students, like just before midsems. Consecutive exit tests make little sense, since the content covered between two ETs is minimal. These tests evaluate us for our “regularity” rather than understanding of the course, going against the words of the regulations. The haphazard implementation of these measures deserves another article, with numerous issues like disparity of difficulty across sections, among others.

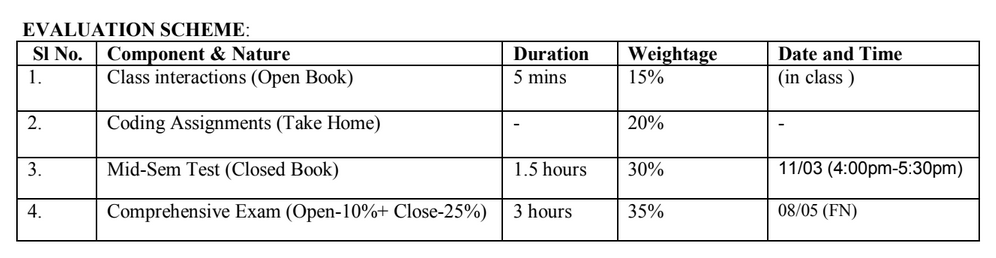

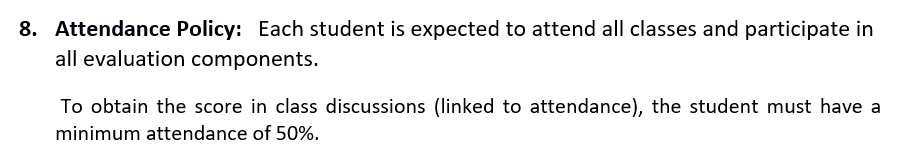

There are courses even in the second semester of 2025-26 where the attendance component counts for 15%, which is a clear and direct violation of Section 4 of the document.

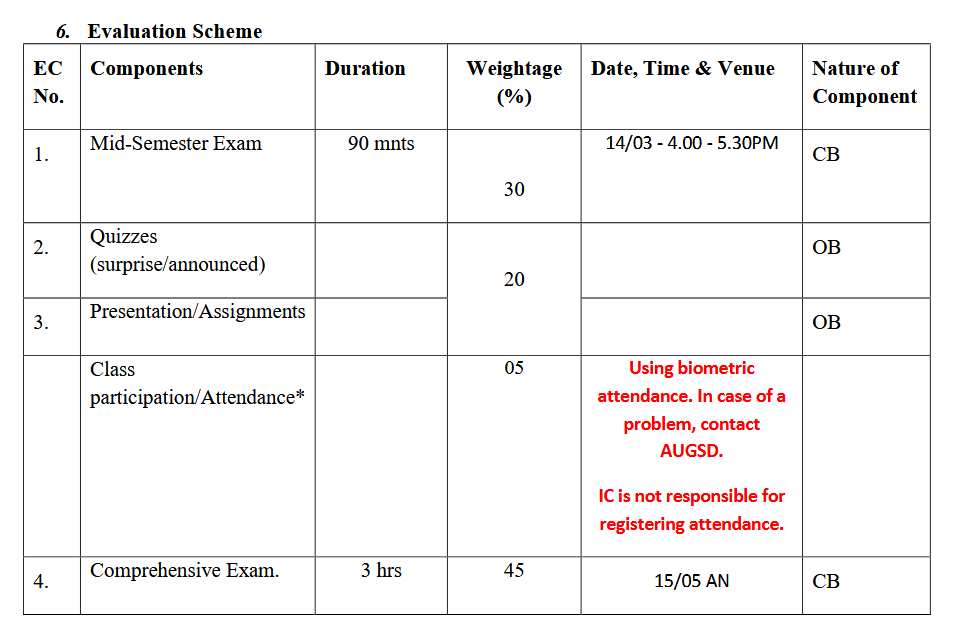

Section 4 is rendered less effective when we see policies under which the marks for these tests are awarded only if the student has biometric attendance of some X% (like 50) for the lectures. Multiple courses did this in the first semester of 2025-26, well after the directive was dated (31st March 2025). A few of these courses repealed the policy when the PCTs were being released, due to widespread issues in the implementation. Handout revisions were seen for courses like EMT and M2, to remove the attendance wall, but it was too late – students were forced to attend classes in the section they had registered for, with little freedom to switch lecturers. How does a move where the class participation marks are hidden behind a wall of attendance even make sense?

Official policy discourages instructors from keeping direct marks for attendance, asking them instead to award marks via tests conducted during lectures or tutorials. A few courses in both the semesters of 2025-26 were/are directly violating this rule. Their handouts directly spell out marks for attendance, or the professors take attendance on paper instead of conducting the quizzes declared in those handouts.

Last semester, some students were told by their course IC to approach AUGSD if they had any issues with the direct attendance component. It is unknown whether anyone approached the C-Block office to explain the issues with the evaluation scheme. The professor claimed that the handout was already approved by AUGSD, so students thought, what is the point? The same policies continue this semester.

This blatant contradiction of the rules should be stopped by AUGSD, as it goes against their own instructions. The system should reconsider its mechanisms for awarding marks for classroom participation. An institute like ours, priding itself on academic rigour, should not simply award marks for one's presence in the classroom without even checking the learning goals and retention of the lecture content.

The freshers were informed of a large grading change late into the semester, after attendance-linked evaluation had already influenced behaviour. One of their 1-credit courses was converted to not count towards their CGPA, but the switch happened when the semester was almost over.

The overarching problem here is that the current implementation of exit tests and attendance factors, whether as gates to marks or as direct marks, defeats the purpose of the regulations, and also the learning outcomes of any course.

Section 9: Makeup Tests

“If a student provides valid documents certified by the concerned authorities (institute’s CMO in case of medical and Associate Dean in other cases), the IC must arrange a makeup test.”

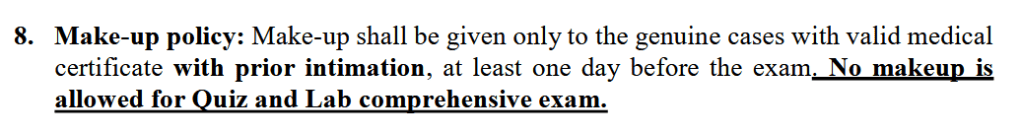

Here the lab compre is worth 20 percent of the course total

The lab compre and quizzes are worth 20 percent of the course total

Many courses flat-out refuse makeups for quizzes and lab evaluatives even in the absence of a best-of policy. It might be hectic to keep makeups for quizzes, but many courses have kept makeups for both quizzes in the past. Our request here should be to make the policy enforcement uniform and fair – especially if someone misses a quiz due to medical reasons.

Section 10:

This section mentions that the faculty should use LMS to maintain uniformity, and not use different platforms. This is a small issue in the light of all of the above, but students have to hop between LMS, Google Classroom, Piazza, rt-moodle etc. in order to access the course resources. The institute should ideally make LMS capable of handling the full set of features that make the other websites convenient for instructors to disseminate and collect information.

Section 11:

“To ensure student privacy, individual marks/grades should not be shared with the entire class. Instead, grades must be uploaded and accessed through institute-approved Learning Management Systems (LMS)”

These measures have already been implemented for a few courses. The MPI course, with around 800 registered students, successfully maintained individual privacy from the time the document was mailed, with marks being sent directly to students throughout the semester. A ranklist with only the sorted marks was also sent so that students could be satisfied with some level of transparency in the grading process. Even though such solutions exist, only a few instructors follow the measures. The institute should strive to make all instructors aware of the rules, and prohibit them from releasing public spreadsheets with the scores from each evaluative component. This can be a very helpful step in increasing the general well-being of students in our academic pursuits. [To quote my favourite movie here – “Grades create divide”, wise words from 3 Idiots]

Section 14:

“The DCA should ensure that these guidelines are clearly outlined in the course handout and are consistently implemented by the Instructor-in-Charge and other instructors in their respective departments.”

Section 15:

“If a student has exhausted all options of addressing concerns with his/her course Instructor-in-Charge, Head of the Department, or Associate Dean (AUGSD/AGSRD), they may escalate the matter to the University Leadership by emailing academic.grievances@bits-pilani.ac.in with relevant supporting documents.”

Sections 14 and 15 outline the responsibilities of the Departmental Committee of Academics (DCA), and how their oversight is supposed to prevent instructors from deviating from this document. The handouts which are released to us students are generally approved by the DCA and AUGSD before being uploaded on LMS/Classroom. The question to be asked here should occur naturally to all of us – where are these rules being implemented? Direct marks for attendance are being awarded. The number of exit tests and their makeups (if any) are rarely declared inside a handout. Handouts are changed through the semester. Surprise evaluations still count for more than 10% of the course total.
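The lapses listed above are mechanical enough that a handout could, in principle, be screened for them before it is approved. The following is only a rough Python sketch of such a check. The `Component` structure, the `kind` labels, and the `count_declared` flag are hypothetical names invented for this illustration and do not describe any actual DCA or AUGSD process.

```python
from dataclasses import dataclass


@dataclass
class Component:
    name: str
    weight_percent: float
    kind: str  # "announced", "surprise", or "attendance" (labels assumed for this sketch)
    count_declared: bool = True  # does the handout state how many tests there will be?


def check_handout(components):
    """Return a list of guideline issues found in a handout's evaluation scheme."""
    issues = []

    # Section 4: surprise assessments should not exceed 10% of the total.
    surprise_total = sum(c.weight_percent for c in components if c.kind == "surprise")
    if surprise_total > 10:
        issues.append(f"surprise components total {surprise_total}%, above the 10% cap")

    for c in components:
        # Section 4: no direct marks for attendance.
        if c.kind == "attendance" and c.weight_percent > 0:
            issues.append(f"'{c.name}' awards direct marks for attendance")
        # Section 1: the frequency of unannounced components should be stated.
        if c.kind == "surprise" and not c.count_declared:
            issues.append(f"'{c.name}' does not declare the number of tests")

    return issues


# A made-up handout, not taken from any real course.
handout = [
    Component("Midsem", 30, "announced"),
    Component("Compre", 40, "announced"),
    Component("Exit tests", 15, "surprise", count_declared=False),
    Component("Attendance", 15, "attendance"),
]
for issue in check_handout(handout):
    print(issue)
```

A check like this only covers what can be read off the handout. It cannot catch a handout that is changed mid-semester or an instructor who deviates from what was declared.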

Who pays the price for all of these lapses in the policing of courses? The learning experience of students. We start attending classes not for knowledge, but just for marks. Someone has to attend a quiz while being sick, instead of resting and healing.

The oversight over ICs is either ineffective or inconsistent, if such evaluations still go on over 9 months after the email. The aim of this article is not to point fingers at individual professors, but to ask for collective improvement across evaluations. Maintaining faculty autonomy hand-in-hand with the measures might not be easy, but the guidelines are meant to be followed by both the faculty and students. We deserve predictability and transparency. Rules which exist without complete implementation are no longer safeguards, but become sources of anxiety. What we need now is a solution where the guidelines are implemented with consistency, and where the cost of lapses is not borne by the students.

Academic Regulations

Guidelines for Evaluation and Assessment
